Designing vSAN clusters with a failures to tolerate (FTT) value of 2 allows increased redundancy as well as reduced risk, especially during maintenance windows. However, that increase in redundancy comes at a storage cost. In all-flash solutions, that storage cost can be somewhat offset by leveraging vSAN RAID 6 (Erasure Coding) policies. When designing clusters with an FTT=2 using RAID 6 policies, there are several aspects to consider:

What is vSAN RAID 6 Erasure Coding?

RAID 6 is a type of RAID that leverages 4 data bits with 2 parity bits. VMware vSAN uses erasure coding (a term that means to encode and partition data into fragments) to split data into the 4 data and 2 parity components. Those computations of erasure coding have a performance impact, and that is why VMware requires all flash storage in order to use RAID 6 erasure coding policies. The use of erasure coding affords a considerable capacity improvement. For example, when using RAID 1 for an FTT=2 policy, the storage multiplier is 3x – a 100GB VM will consume 300GB. With RAID 6, the storage multiplier is only 1.5x. That same 100GB VM will only consume 150GB. Check out the Virtual Block’s blog for some great info on the use of erasure coding in vSAN.

vSAN RAID 6 (Erasure Coding) Requirements

If you want to leverage RAID 6 erasure coding polices, the following requirements must be met:

- vSAN Advanced license

- The hosts in the cluster must use all-flash storage

- The cluster must have at least 6 hosts

- On-disk format version 3 or later for RAID 5/6 polices

Additional information on vSAN RAID 6 requirements can be found here.

Physical Host Layout

Before getting into vSAN configuration specifics, it is best practice to separate hosts physically. This is commonly accomplished by splitting hosts in a cluster among multiple racks. Those racks should be spread out as much as is possible. The only requirement is a sub-1ms RTT between hosts in the cluster. Given that using RAID 6 erasure coding policies have a minimum 6 host requirement, it would be ideal to split a cluster into 6 or more racks.

Use of Fault Domains

vSAN uses what is referred to as fault domains to represent the physical grouping of hosts. In many cases, one fault domain represents one rack of hosts. VMware’s documentation for managing fault domains on vSAN is very helpful, and I recommend giving it a read. However, that post indicates the formula for achieving FTT=2 with fault domains uses the formula 2*n+1 where n is the desired FTT value. Using that formula would indicate that 5 fault domains would be enough to satisfy the requirement. That formula does not hold true for RAID 6 policies. If you refer back to the the 6 components (4 data + 2 parity) used in RAID 6 policies, vSAN will distribute those components across the configured fault domains. In short, if you want to leverage fault domains with RAID 6 erasure coding policies, there must be at least 6 fault domains. If the cluster being designed has exactly 6 nodes, or an odd number of nodes, there is no drawbacks to putting only one host into a fault domain (even if other fault domains in the same cluster have more than one host). Although the use of fault domains is recommended, they are not required. RAID 6 policies will also work with no fault domains configured.

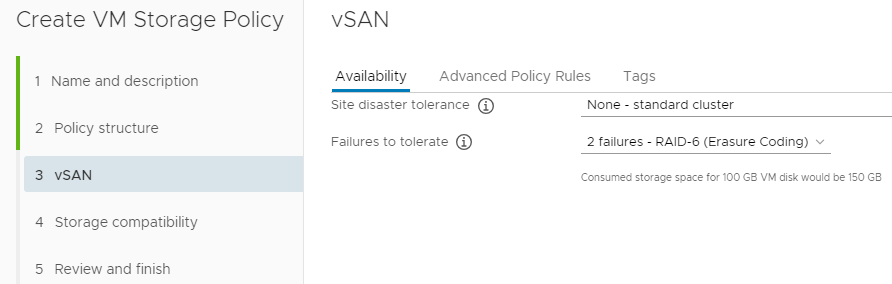

Configuring the RAID 6 Storage Policy

At this point, the only remaining piece of the “puzzle” is to set up the SPBM policy for RAID 6. Assuming everything is set up correctly, this will be the easiest part. Simply create a new Storage Policy and select “2 failures – RAID-6 (ErasureCoding)” for the failures to tolerate option under vSAN rules.

The next screen will show all of the vSAN datastores in your environment that are compatible with the RAID 6 policy. If you do not see the proper datastore in this list, change the drop down to show incompatible clusters, and hover over the incompatibility reason. If you see an error similar to “VASA provider is out of resources to satisfy this provisioning request”, or see symptoms outlined in VMware KB 2065479, verify that you have a proper fault domain count, and that they are configured properly.